A Mixture of Gaussians Is Best Described by Its Generative Model

Gmr is a Python library for Gaussian mixture regression (GMR). The Gaussian mixture model it builds on is a widely used model for generative unsupervised learning and clustering.

Gaussian Mixture Model (GMM) Best Practices

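The probability density function of the movMF (mixture of von Mises-Fisher distributions) generative model is given by $f(x \mid \Theta) = \sum_{h=1}^{k} \alpha_h f_h(x \mid \theta_h)$, where the $\alpha_h$ are the mixing weights and $f_h$ is the density of the $h$th vMF component.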

Even if non-parametric methods are used for spike sorting, such generative models provide a quantitative measure of unit isolation quality. But since there are K such clusters and the probability. The mixture of Gaussians is among the most enduring, well-weathered models of applied statistics.

One first generates a discrete variable that determines which of the component Gaussians to use, and then generates an observation from the chosen density. Chronic extracellular recordings are a powerful tool for systems neuroscience, but spike sorting remains a challenge. For example: with probability 0.7 choose component 1, otherwise choose component 2. If we chose component 1, then sample x from a Gaussian with mean 0 and standard deviation 1.
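A minimal sketch of this two-step (ancestral) sampling procedure in plain Python. Component 1 follows the text (chosen with probability 0.7, mean 0, standard deviation 1). The text does not give component 2's parameters, so the mean 4 and standard deviation 0.5 below are hypothetical, as are the names `COMPONENTS` and `sample_mixture`.

```python
import random
from typing import Tuple

# Component 1 follows the text: chosen with probability 0.7, N(mean=0, std=1).
# Component 2's parameters are NOT given in the text; (4.0, 0.5) is hypothetical.
P_COMPONENT_1 = 0.7
COMPONENTS = {1: (0.0, 1.0), 2: (4.0, 0.5)}  # component -> (mean, std)


def sample_mixture(rng: random.Random) -> Tuple[int, float]:
    """Draw one (component, x) pair: first the discrete choice, then x."""
    z = 1 if rng.random() < P_COMPONENT_1 else 2  # step 1: pick a component
    mean, std = COMPONENTS[z]
    x = rng.gauss(mean, std)                       # step 2: sample from it
    return z, x


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [sample_mixture(rng) for _ in range(10_000)]
    share_1 = sum(1 for z, _ in draws if z == 1) / len(draws)
    print(f"share of draws from component 1: {share_1:.3f}")  # close to 0.7
```

Keeping the component label `z` alongside `x` is deliberate: in a fitted mixture this label is exactly the hidden variable that inference later tries to recover.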

It runs in time only linear in the dimension of the data and polynomial in the number of Gaussians. We simply restricted the generative model to exhibit a specific behavior, which indirectly led the modified-M2 model to decide that having 10 different data-generating manifolds is a good thing. Gaussian mixture models assume the data came from a mixture of Gaussians (elliptical data) and assign each data point to a cluster with a certain probability (soft clustering); at a high level they are very similar to k-means.
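To illustrate the difference between soft clustering and k-means, here is a small sketch for one-dimensional data. `hard_assign` gives the whole point to the nearest center, while `soft_assign` returns the posterior probability of each component, proportional to mixing weight times density. Both function names and the two-cluster parameters in the usage lines are hypothetical.

```python
import math


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of N(mean, std^2) at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def hard_assign(x: float, centers: list) -> int:
    """k-means style: the index of the nearest center gets the whole point."""
    return min(range(len(centers)), key=lambda k: abs(x - centers[k]))


def soft_assign(x: float, weights: list, means: list, stds: list) -> list:
    """GMM style: posterior p(component k | x), proportional to weight * density."""
    scores = [w * gaussian_pdf(x, m, s) for w, m, s in zip(weights, means, stds)]
    total = sum(scores)
    return [score / total for score in scores]


# Hypothetical two-cluster setup: centers at 0 and 2, equal weights, unit std.
print(hard_assign(0.8, [0.0, 2.0]))                          # 0
print(soft_assign(0.8, [0.5, 0.5], [0.0, 2.0], [1.0, 1.0]))  # roughly [0.60, 0.40]
```

A point at 0.8 is closer to the first center, so k-means assigns it there outright; the mixture keeps about 40% of its probability on the second component.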

Finally, we integrate our model with the k-nearest-neighbor graph to capture. The Real Gaussian. Gaussian Mixture Model.

It is particularly well suited to describing data that contain clusters. You have to use a non-degenerate mixture of Gaussians or I will cut you. The Gaussian mixture model, or mixture of Gaussians, models a convex combination of the component distributions.

$P(x \mid \mu, \sigma^2) = \mathcal{N}(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right)$. A Gaussian distribution is a continuous probability distribution that is characterized by its symmetrical bell shape. GMR is a regression approach.
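The univariate density translates directly into code. A minimal sketch (the name `normal_pdf` is illustrative), parameterized by the variance $\sigma^2$ as in the formula rather than by the standard deviation:

```python
import math


def normal_pdf(x: float, mu: float, sigma2: float) -> float:
    """P(x | mu, sigma^2) = exp(-(x - mu)^2 / (2 sigma^2)) / sqrt(2 pi sigma^2)."""
    if sigma2 <= 0:
        raise ValueError("the variance sigma^2 must be positive")
    return math.exp(-((x - mu) ** 2) / (2 * sigma2)) / math.sqrt(2 * math.pi * sigma2)


print(normal_pdf(0.0, 0.0, 1.0))  # peak of the standard normal, about 0.3989
print(normal_pdf(1.0, 0.0, 1.0) == normal_pdf(-1.0, 0.0, 1.0))  # symmetric: True
```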

The Gaussian mixture model, or mixture of Gaussians as it is sometimes called, is not so much a model as a probability distribution. The mixture of Gaussians model is probably the simplest interesting example of a generative model that illustrates the principles of inference and learning (Olshausen, November 1, 2010). Our model assumes a conditional mixture-of-Gaussians prior on the latent representation of the data.

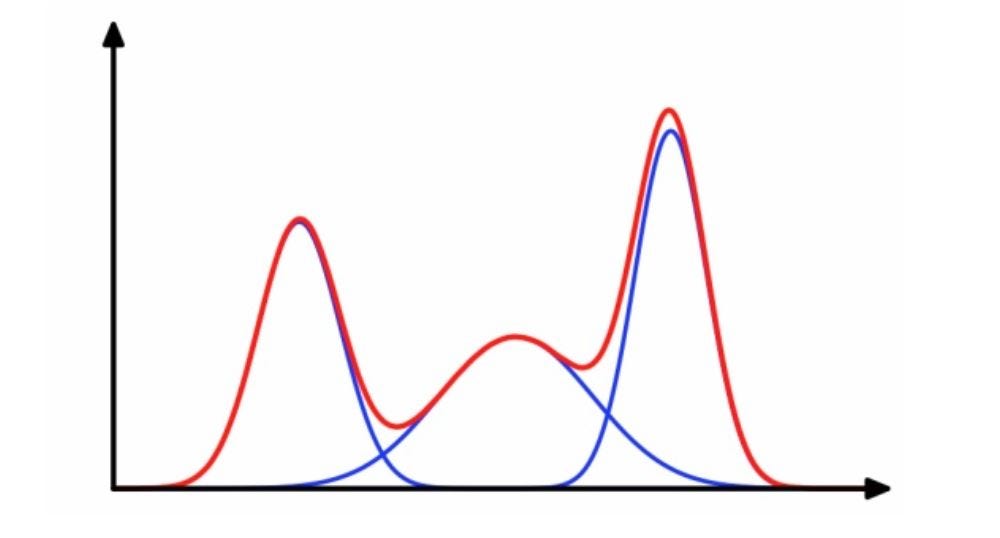

A Gaussian mixture describes a random variable whose PDF could come from one of two or more Gaussians, but we will use just two in this demo. A mixture of Gaussians is best described in terms of its generative model. Its components are indexed by $k = 1, \dots, K$, with $\pi_k$ the mixing coefficients.

A common approach is to fit a generative model, such as a mixture of Gaussians, to the observed spike data. Gaussian Mixture Model (Sham Kakade, 2016): the most commonly used mixture model. k-means iterates between assigning examples to clusters and updating the cluster centers; a GMM instead makes soft assignments, e.g., p(blue) = 0.8, p(red) = 0.2 for one example and p(blue) = 0.9, p(red) = 0.1 for another.
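To make the assign/update loop concrete, here is a minimal sketch of expectation maximization (EM) for a one-dimensional Gaussian mixture in plain Python. The E-step computes soft assignments (responsibilities) like the p(blue)/p(red) values above; the M-step re-estimates weights, means, and variances from them. The name `em_1d`, the fixed iteration count, the variance floor `min_var`, and the synthetic data are assumptions for illustration; a production version would also track the log-likelihood for convergence and handle components that collapse or lose all their points.

```python
import math
import random


def normal_pdf(x, mu, var):
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_1d(xs, weights, means, variances, n_iter=50, min_var=1e-6):
    """Fit a 1D Gaussian mixture with EM, starting from the given initial guess."""
    weights, means, variances = list(weights), list(means), list(variances)
    k_range = range(len(weights))
    for _ in range(n_iter):
        # E-step: responsibility r[k] = p(component k | x) for every point.
        resp = []
        for x in xs:
            scores = [weights[k] * normal_pdf(x, means[k], variances[k]) for k in k_range]
            total = sum(scores)
            resp.append([s / total for s in scores])
        # M-step: re-estimate each component from responsibility-weighted data.
        for k in k_range:
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(xs)
            means[k] = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, xs)) / nk
            variances[k] = max(var, min_var)  # floor keeps the variance positive
    return weights, means, variances


if __name__ == "__main__":
    # Hypothetical data: 300 points from N(-2, 0.5^2) and 700 from N(3, 1).
    rng = random.Random(0)
    data = [rng.gauss(-2, 0.5) for _ in range(300)] + [rng.gauss(3, 1) for _ in range(700)]
    w, m, v = em_1d(data, [0.5, 0.5], [-1.0, 1.0], [1.0, 1.0])
    print([round(val, 2) for val in w], [round(val, 2) for val in m])
```

Replacing the soft responsibilities with one-hot nearest-center assignments and dropping the variance update turns this loop into k-means.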

The Gaussian mixture model is a generative model that assumes the data are generated from multiple Gaussian distributions, each with its own mean and variance. There is a certain probability that a sample comes from the first Gaussian; otherwise it comes from the second. These preferences are expressed as Bayesian prior probabilities with varying degrees of uncertainty.

One can think of mixture models as generalizing k-means clustering to incorporate information about the covariance structure of the data as well as the centers of the latent Gaussians. A mixture distribution combines different component distributions with weights that typically sum to one (or can be renormalized to do so). A Gaussian mixture is the special case where the components are Gaussians.
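The covariance structure is what separates a GMM component from a bare k-means center. A minimal sketch of a two-dimensional Gaussian density with a full covariance matrix, using the closed-form inverse of a 2x2 matrix so no linear-algebra library is needed; `mvn_pdf_2d` and the correlated example covariance are hypothetical.

```python
import math


def mvn_pdf_2d(point, mean, cov):
    """Density of a 2D Gaussian N(mean, cov) at point, with cov = ((a, b), (b, d))."""
    (a, b), (b2, d) = cov
    if b != b2:
        raise ValueError("covariance matrix must be symmetric")
    det = a * d - b * b
    if a <= 0 or det <= 0:
        raise ValueError("covariance matrix must be positive definite")
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    # Squared Mahalanobis distance using the 2x2 inverse ((d, -b), (-b, a)) / det.
    maha2 = (d * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * maha2) / (2 * math.pi * math.sqrt(det))


# Hypothetical strongly correlated component: both points are equally far from
# the center in Euclidean terms, but their densities differ sharply.
cov = ((1.0, 0.9), (0.9, 1.0))
print(mvn_pdf_2d((1.0, 1.0), (0.0, 0.0), cov))   # along the correlation: larger
print(mvn_pdf_2d((1.0, -1.0), (0.0, 0.0), cov))  # against it: much smaller
```

k-means, which measures plain Euclidean distance, would treat these two points identically.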

In other words, the mixing coefficients of all the Gaussians should add up to one. For example, let the latent variable be a person's country of origin and the observation the height of the $i$th person; the $k$th mixture component is then the distribution of heights in country $k$. It has the following generative process.

GMR models probability distributions rather than functions. A Gaussian mixture model (GMM) represents a distribution as $p(x) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x \mid \mu_k, \Sigma_k)$. Generative models can be used for explanation or prediction, and for inference.
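Gaussian mixture regression conditions a joint mixture over $(x, y)$ on an observed $x$. The sketch below handles scalar $x$ and $y$ with the standard Gaussian conditioning formulas; it is not the Gmr library's API, and `Component`, `condition_on_x`, `predict_mean`, and the example parameters are hypothetical. The conditional $p(y \mid x)$ is itself a Gaussian mixture, which is the sense in which GMR models distributions rather than functions; its mean gives a point prediction.

```python
import math
from typing import List, NamedTuple, Tuple


class Component(NamedTuple):
    """One joint Gaussian over (x, y) plus its mixing weight (hypothetical layout)."""
    weight: float
    mean_x: float
    mean_y: float
    var_x: float
    cov_xy: float
    var_y: float


def normal_pdf(x: float, mu: float, var: float) -> float:
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def condition_on_x(components: List[Component], x: float) -> List[Tuple[float, float, float]]:
    """Return p(y | x) as (weight, mean, variance) triples: again a Gaussian mixture."""
    # New weights: how well each component explains the observed x.
    raw = [c.weight * normal_pdf(x, c.mean_x, c.var_x) for c in components]
    total = sum(raw)
    result = []
    for c, r in zip(components, raw):
        mean = c.mean_y + c.cov_xy / c.var_x * (x - c.mean_x)  # conditional mean
        var = c.var_y - c.cov_xy ** 2 / c.var_x                 # conditional variance
        result.append((r / total, mean, var))
    return result


def predict_mean(components: List[Component], x: float) -> float:
    """Point prediction E[y | x]: the weighted average of the conditional means."""
    return sum(w * m for w, m, _ in condition_on_x(components, x))


# Hypothetical two-component model with opposite slopes on either side of x = 0.
model = [Component(0.5, -2.0, 2.0, 1.0, -0.8, 1.0), Component(0.5, 2.0, 2.0, 1.0, 0.8, 1.0)]
print(predict_mean(model, -2.0), predict_mean(model, 2.0))
```

Returning the full conditional mixture, rather than only `predict_mean`, preserves multimodality: for some inputs a GMR model can say that two quite different outputs are both plausible.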

We know that neural nets are universal approximators of functions. Examples of generative models: naive Bayes, hidden Markov model, Gaussian mixture, Markov. Had there been only one distribution, its parameters could have been estimated directly by the maximum-likelihood method.
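For a single Gaussian the maximum-likelihood estimates have a closed form: the sample mean and the sample variance with a $1/n$ (not $1/(n-1)$) normalizer. A minimal sketch, with `gaussian_mle` as an illustrative name; with a mixture, the component of each point is unknown, which is why iterative methods such as EM are typically used instead.

```python
def gaussian_mle(xs):
    """Maximum-likelihood estimates (mean, variance) for a single Gaussian."""
    if not xs:
        raise ValueError("need at least one observation")
    n = len(xs)
    mu = sum(xs) / n
    var = sum((x - mu) ** 2 for x in xs) / n  # ML divides by n, not n - 1
    return mu, var


print(gaussian_mle([1.0, 2.0, 3.0, 4.0]))  # (2.5, 1.25)
```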

Maximum Likelihood and Maximum a Posteriori

The model parameters θ that make the data most probable are called the maximum-likelihood (ML) parameters. So and is also estimated for each k. Centers of the Gaussians to within the precision specified by the user, with high probability.
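Written out under the usual assumptions of $N$ i.i.d. observations $X = \{x_1, \dots, x_N\}$ and a prior $p(\theta)$ (standard definitions, not specific to this source), the two estimates are:

```latex
\begin{aligned}
\theta_{\mathrm{ML}}  &= \arg\max_{\theta} \; p(X \mid \theta)
  = \arg\max_{\theta} \sum_{i=1}^{N} \log p(x_i \mid \theta), \\
\theta_{\mathrm{MAP}} &= \arg\max_{\theta} \; p(\theta \mid X)
  = \arg\max_{\theta} \Big[ \sum_{i=1}^{N} \log p(x_i \mid \theta) + \log p(\theta) \Big].
\end{aligned}
```

The MAP line follows from Bayes' rule, $p(\theta \mid X) \propto p(X \mid \theta)\, p(\theta)$, since the evidence $p(X)$ does not depend on $\theta$. With a flat prior the two estimates coincide.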

The key point here is that the combination is convex: the weights are nonnegative and sum to one, so the mixture is itself a valid probability density. Generative models model the joint probability distribution of the data given some hidden parameters or variables, and then perform conditional inference. They recover the data distribution (the essence of data science) and benefit from hidden-variable modeling. Suppose there are K clusters. For the sake of simplicity, it is assumed here that the number of clusters is known and equal to K.
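A quick numerical check of why convexity matters, as a sketch with hypothetical component parameters: with weights that sum to one the mixture integrates to 1, while weights summing to 1.5 give total mass 1.5, so the result is no longer a probability density. The integral is approximated with a simple midpoint rule over a range wide enough that the Gaussian tails are negligible.

```python
import math


def normal_pdf(x, mu, var):
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def mixture_pdf(x, weights, means, variances):
    """p(x) = sum_k w_k N(x | mu_k, var_k)."""
    return sum(w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances))


def integrate(f, lo=-20.0, hi=20.0, n=20_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))


means, variances = [-1.0, 2.0], [1.0, 0.5]
convex = integrate(lambda x: mixture_pdf(x, [0.3, 0.7], means, variances))
not_convex = integrate(lambda x: mixture_pdf(x, [0.9, 0.6], means, variances))
print(round(convex, 6), round(not_convex, 6))  # 1.0 1.5
```

Nonnegativity matters too: a negative weight could push the "density" below zero somewhere, which no probability density allows.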

$\sum_{k=1}^{K} \pi_k = 1$ and $\pi_k \ge 0 \;\; \forall k$. A GMM is a density estimator; where have we already used a density estimator? That is, a Gaussian mixture model conditioned on the user's clustering preferences, based, e.g. Hence it is possible to model.
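When raw weights do not already satisfy these constraints, they can be renormalized, as long as none is negative and at least one is positive. A minimal sketch; `normalize_weights` is an illustrative name, and the usage turns "one part and three parts" into mixing coefficients.

```python
def normalize_weights(raw_weights):
    """Rescale nonnegative weights so they satisfy sum_k pi_k = 1, pi_k >= 0."""
    if any(w < 0 for w in raw_weights):
        raise ValueError("mixing weights must be nonnegative")
    total = sum(raw_weights)
    if total <= 0:
        raise ValueError("at least one mixing weight must be positive")
    return [w / total for w in raw_weights]


print(normalize_weights([1, 3]))  # [0.25, 0.75]
```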

The mixture of Gaussians model (Bruno A. Olshausen). For instance, here is a mixture of 25% $\mathcal{N}(-2, 1)$ and 75% $\mathcal{N}(2, 1)$, which you could call "one part $\mathcal{N}(-2, 1)$ and three parts $\mathcal{N}(2, 1)$".
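A small sketch of this example, assuming the components are centered at -2 and 2 with unit variance. It evaluates the mixture density and computes the mixture's mean and variance from $E[X] = \sum_k \pi_k \mu_k$ and $E[X^2] = \sum_k \pi_k (\sigma_k^2 + \mu_k^2)$. The constant and function names are illustrative.

```python
import math


def normal_pdf(x, mu, var):
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


# Assumed reading of the example: 25% N(-2, 1) and 75% N(2, 1) (mean, variance).
WEIGHTS, MEANS, VARIANCES = [0.25, 0.75], [-2.0, 2.0], [1.0, 1.0]


def mixture_pdf(x):
    return sum(w * normal_pdf(x, m, v) for w, m, v in zip(WEIGHTS, MEANS, VARIANCES))


def mixture_moments():
    """Mixture mean and variance, using Var[X] = E[X^2] - E[X]^2."""
    mean = sum(w * m for w, m in zip(WEIGHTS, MEANS))
    second = sum(w * (v + m * m) for w, m, v in zip(WEIGHTS, MEANS, VARIANCES))
    return mean, second - mean * mean


print(mixture_moments())                     # (1.0, 4.0)
print(mixture_pdf(2.0) / mixture_pdf(-2.0))  # close to 3: the taller bump is at x = 2
```

The variance of 4 is much larger than either component's variance of 1, because the spread between the two component means adds to the within-component spread.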

A univariate Gaussian distribution is defined by the density $P(x \mid \mu, \sigma^2)$ given earlier. Building up to the mixture of Gaussians: single Gaussians, fully observed mixtures, hidden mixtures. Then a DP-based (Dirichlet process) mixture model with a constrained mixture of Gaussians (MoG) is constructed to handle the manifold data.
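In the fully observed case every point's component label is known, so maximum likelihood splits into independent closed-form problems: each mixing weight is the fraction of points carrying that label, and each component's mean and variance come from its own points. A minimal sketch with a hypothetical function name and toy data; in the hidden case the labels are unknown and are replaced by estimated responsibilities.

```python
from collections import defaultdict


def fit_fully_observed(xs, labels):
    """ML fit of a 1D Gaussian mixture when every point's component label is known.

    Returns {label: (weight, mean, variance)}.
    """
    groups = defaultdict(list)
    for x, z in zip(xs, labels):
        groups[z].append(x)
    n = len(xs)
    params = {}
    for z, members in sorted(groups.items()):
        mu = sum(members) / len(members)
        var = sum((x - mu) ** 2 for x in members) / len(members)
        params[z] = (len(members) / n, mu, var)  # weight = share of points with label z
    return params


print(fit_fully_observed([0.0, 2.0, 10.0, 12.0, 14.0], ["a", "a", "b", "b", "b"]))
```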

Surprisingly, we made no change to the inference model at all. A Gaussian mixture model is a probabilistic model that assumes all the data points are generated from a mixture of a finite number of Gaussian distributions with unknown parameters. Sample a class index from a categorical distribution to determine the class to which the data point will belong.

Figure 2 shows an example of a mixture of Gaussians model with 2 components. If we consider the GMM to be a generative model, then we can imagine the generating process as follows. In this section we introduce a mixture of $k$ von Mises-Fisher (vMF) distributions (movMF) as a generative model for directional data, and then derive the update equations for the mixture-density parameters from a given data set using the expectation maximization (EM) framework.

An example of a univariate mixture of Gaussians model.
